Introduction to FLEET: Federated Learning Testbed

FLEET Overview

Federated Learning (FL) is an approach to training machine learning models in which raw data stays on the devices that produce it and only model updates are shared, which helps protect data privacy. However, deploying FL in the real world faces challenges such as:

- System and network heterogeneity

- Communication delays

- Non-IID data distributions
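To make the first two challenges concrete, here is a back-of-the-envelope sketch of why heterogeneity and communication delays hurt synchronous FL: every round waits for the slowest client. The `ClientProfile` fields and the numbers are hypothetical, and the model deliberately ignores TCP dynamics and server-side aggregation time.

```python
from dataclasses import dataclass


@dataclass
class ClientProfile:
    """Hypothetical per-client profile (not FLEET's configuration schema)."""
    name: str
    compute_s: float       # local training time for one round, in seconds
    bandwidth_mbps: float  # uplink bandwidth, in megabits per second
    latency_ms: float      # one-way latency, in milliseconds


def upload_time_s(update_mb: float, client: ClientProfile) -> float:
    """Time to send an update of `update_mb` megabytes over the client's uplink."""
    transfer_s = (update_mb * 8) / client.bandwidth_mbps  # MB -> Mbit, then divide by Mbit/s
    return client.latency_ms / 1000 + transfer_s


def round_time_s(update_mb: float, clients: list[ClientProfile]) -> float:
    """In synchronous FL, a round ends only when the slowest client has reported back."""
    return max(c.compute_s + upload_time_s(update_mb, c) for c in clients)


clients = [
    ClientProfile("fast", compute_s=5.0, bandwidth_mbps=100.0, latency_ms=10.0),
    ClientProfile("slow", compute_s=20.0, bandwidth_mbps=5.0, latency_ms=100.0),
]
print(f"round time: {round_time_s(10.0, clients):.2f} s")
```

With a 10 MB update, the slow client needs 16 s just to upload on top of 20 s of training, so the round takes about 36 s even though the fast client is done in under 6 s.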

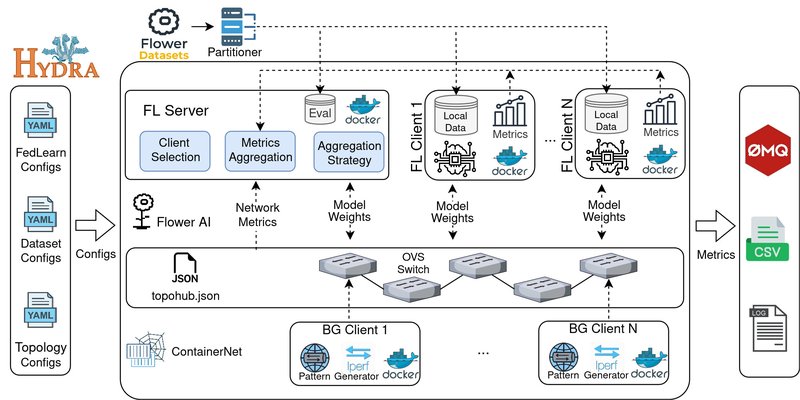

To address these challenges, FLEET (Federated Learning Emulation and Evaluation Testbed) was developed. FLEET bridges the gap between FL simulations and real-world deployments by providing a controlled environment that replicates realistic conditions.

FLEET integrates key technologies:

- Flower: A framework-agnostic FL library.

- Containernet: A fork of the Mininet network emulator that uses Docker containers as emulated hosts.

- Hydra: A configuration management system for easy experimentation.

FLEET enables researchers and developers to:

- Evaluate FL algorithms under real-world network conditions.

- Simulate client and server resource limitations.

- Add background network traffic to study its effects.
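Resource limits of this kind are usually enforced per container. As an illustration only: Docker's `--cpus` option is documented as equivalent to setting `--cpu-period=100000` together with a proportional `--cpu-quota`, and the sketch below reproduces that arithmetic. How FLEET itself applies CPU limits is not shown in this post, and the client names and CPU budgets are hypothetical.

```python
DEFAULT_CFS_PERIOD_US = 100_000  # Docker's default CFS scheduling period (100 ms)


def cpu_limit_to_quota(cpus: float, period_us: int = DEFAULT_CFS_PERIOD_US) -> tuple[int, int]:
    """Translate a fractional CPU limit (as in Docker's --cpus) into a CFS (period, quota) pair."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    # The container may use `quota` microseconds of CPU time per `period` microseconds.
    return period_us, round(cpus * period_us)


# Hypothetical per-client CPU budgets to emulate heterogeneous devices.
client_cpus = {"client_0": 1.5, "client_1": 0.5, "client_2": 0.25}
for name, cpus in client_cpus.items():
    period, quota = cpu_limit_to_quota(cpus)
    print(f"{name}: --cpu-period={period} --cpu-quota={quota}")
```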

Why Use FLEET?

FLEET makes it easy to study the interplay between FL algorithms, network conditions, and system constraints. Key features include:

- Network Emulation: Simulate realistic bandwidth, latency, packet loss, and topologies.

- Resource Control: Limit CPU/memory for each FL client/server.

- Configuration Management: Use Hydra to define datasets, models, and network settings.

- Background Traffic: Add congestion and interference through built-in traffic generators.

- Real-time Metrics: Monitor accuracy, loss, CPU, memory, bandwidth usage, and more.
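Packet loss interacts with latency in a way that is easy to underestimate. A rough illustration uses the well-known Mathis et al. approximation for steady-state TCP throughput, (MSS / RTT) * sqrt(3/2) / sqrt(p), to show how a lossy link can cap an FL client's upload rate far below the link's nominal bandwidth. This is a simplified model chosen for illustration (modern congestion control such as CUBIC behaves differently), not something FLEET computes.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate steady-state TCP throughput in bits/s: (MSS/RTT) * sqrt(3/2) / sqrt(p)."""
    c = math.sqrt(3 / 2)
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))


def effective_throughput_bps(link_bps: float, mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """TCP can go no faster than the link itself, nor faster than the loss rate allows."""
    if loss_rate <= 0:
        return link_bps
    return min(link_bps, mathis_throughput_bps(mss_bytes, rtt_s, loss_rate))


# Example: 100 Mbit/s link, 50 ms RTT, 1% packet loss, 1460-byte segments.
bps = effective_throughput_bps(100e6, 1460, 0.050, 0.01)
print(f"{bps / 1e6:.2f} Mbit/s")
```

With these numbers the estimate is roughly 2.9 Mbit/s, so a 10 MB model update would need close to 28 s to upload despite the 100 Mbit/s link.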

Whether you're a researcher exploring FL strategies or a developer building FL-based applications, FLEET provides a comprehensive environment to test your ideas.

Quick Start: Running Your First Experiment

In this section, we'll walk through the steps to set up and run a basic FLEET experiment.

Prerequisites

To use FLEET, ensure you have the following installed:

- Python 3.10+

- Docker (for containerized clients and servers)

- Open vSwitch (for network emulation)

- A Unix-based OS (tested on Ubuntu 22.04)
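The setup script described in step 2 checks these prerequisites itself. If you want a quick stand-alone check before cloning anything, a minimal Python sketch could look like this. It is not part of FLEET, and treating the `ovs-vsctl` binary on PATH as evidence of an Open vSwitch install is an assumption.

```python
import os
import shutil
import sys


def check_prerequisites() -> dict[str, bool]:
    """Report whether each prerequisite listed above appears to be available."""
    return {
        "Python 3.10+": sys.version_info >= (3, 10),
        # Only checks that the docker CLI is on PATH, not that the daemon is running.
        "Docker": shutil.which("docker") is not None,
        # Assumption: ovs-vsctl being on PATH indicates Open vSwitch is installed.
        "Open vSwitch": shutil.which("ovs-vsctl") is not None,
        # os.name is "posix" on Linux and other Unix-like systems.
        "Unix-based OS": os.name == "posix",
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        status = "OK" if ok else "MISSING"
        print(f"{status:<8} {name}")
```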

1. Cloning the Repository

Start by cloning the FLEET repository:

```bash
git clone https://github.com/oabuhamdan/fleet.git
cd fleet
```

2. Automatic Setup (Recommended)

Run the setup script to install dependencies, configure the environment, and build Docker images:

```bash
bash config.sh
```

This script will:

- Create a Python virtual environment.

- Install Python dependencies.

- Build Docker images for FL clients/servers.

- Set up Containernet for network emulation.

Note: The script checks for prerequisites like Python version, Docker, and Open vSwitch. If any are missing, you'll need to install them manually.

3. Running Your First Experiment

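Once the setup is complete, you can run a default experiment using the provided configuration. The command uses sudo because Containernet, like Mininet, needs root privileges to create the emulated network.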

```bash
sudo .venv/bin/python main.py
```

What happens?

- FLEET initializes the emulated network using Containernet.

- Docker containers are created for the FL server and clients.

- The CIFAR-10 dataset is downloaded and partitioned for federated learning.

- The Containernet interactive CLI is launched.
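The partitioning step is where Non-IID data enters the picture. FLEET's actual partitioning strategy is set through its configuration and is not covered in this post; as a hypothetical illustration, one common way to create label-skewed client datasets is to split each class across clients using proportions drawn from a Dirichlet distribution with concentration `alpha` (smaller values give more skew). The sketch uses synthetic labels as a stand-in for CIFAR-10's 50,000 training labels.

```python
import random
from collections import Counter


def dirichlet_partition(labels: list[int], num_clients: int, alpha: float, seed: int = 0) -> list[list[int]]:
    """Split sample indices across clients, drawing each class's split from Dir(alpha)."""
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    rng = random.Random(seed)
    by_class: dict[int, list[int]] = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)

    partitions: list[list[int]] = [[] for _ in range(num_clients)]
    for idxs in by_class.values():
        rng.shuffle(idxs)
        # A Dirichlet sample is a vector of Gamma(alpha, 1) draws normalized to sum to 1.
        weights = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(weights)
        # Convert cumulative proportions into cut points over this class's indices.
        cuts, acc = [], 0.0
        for w in weights[:-1]:
            acc += w / total
            cuts.append(int(acc * len(idxs)))
        bounds = [0] + cuts + [len(idxs)]
        for client, (lo, hi) in enumerate(zip(bounds, bounds[1:])):
            partitions[client].extend(idxs[lo:hi])
    return partitions


labels = [i % 10 for i in range(50_000)]  # synthetic stand-in: 10 classes, 5,000 samples each
parts = dirichlet_partition(labels, num_clients=5, alpha=0.5)
for cid, idxs in enumerate(parts):
    counts = Counter(labels[i] for i in idxs)
    print(cid, len(idxs), dict(sorted(counts.items())))
```

Printing the per-client label counts makes the skew visible: with `alpha=0.5`, some clients end up dominated by a few classes.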

4. Starting the Training Workflow

From the Containernet CLI, start the FL experiment:

```text
containernet> py net.start_experiment()
```

This command:

- Pings all FL clients to ensure network connectivity.

- Starts the FL server and client training process.
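The two steps performed by `start_experiment()` amount to a simple ordering: verify connectivity, then launch the server before the clients so they have something to connect to. The sketch below outlines that flow with injected callables; the function and parameter names are hypothetical and this is not FLEET's implementation.

```python
from typing import Callable, Iterable


def start_experiment_sketch(
    clients: Iterable[str],
    ping: Callable[[str], bool],
    start_server: Callable[[], None],
    start_client: Callable[[str], None],
    retries: int = 3,
) -> None:
    """Hypothetical outline: check every client is reachable, then start server and clients."""
    clients = list(clients)
    # any() stops at the first successful ping, so each host is tried at most `retries` times.
    unreachable = [h for h in clients if not any(ping(h) for _ in range(retries))]
    if unreachable:
        raise RuntimeError(f"unreachable clients: {unreachable}")
    start_server()  # the server must be listening before clients try to connect
    for host in clients:
        start_client(host)


# Usage with stand-in callables that just record the launch order.
launched: list[str] = []
start_experiment_sketch(
    ["client_0", "client_1"],
    ping=lambda host: True,
    start_server=lambda: launched.append("server"),
    start_client=launched.append,
)
print(launched)
```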

5. Monitoring Logs and Results

After the experiment runs, logs and metrics can be found in the static/logs/ directory. Metrics include:

- Training Metrics: Accuracy, loss, and round times.

- System Metrics: CPU/memory usage for each client.
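The exact file names and formats under static/logs/ are not described in this post. Assuming, hypothetically, a per-round CSV with `round`, `accuracy`, and `loss` columns, a short summary helper might look like this:

```python
import csv
from typing import Iterable


def summarize_metrics(lines: Iterable[str]) -> dict:
    """Summarize per-round metrics from CSV lines with hypothetical columns: round, accuracy, loss."""
    rows = [
        {"round": int(r["round"]), "accuracy": float(r["accuracy"]), "loss": float(r["loss"])}
        for r in csv.DictReader(lines)
    ]
    if not rows:
        raise ValueError("no metric rows found")
    best = max(rows, key=lambda r: r["accuracy"])
    return {
        "rounds": len(rows),
        "final_accuracy": rows[-1]["accuracy"],
        "final_loss": rows[-1]["loss"],
        "best_accuracy": best["accuracy"],
        "best_round": best["round"],
    }


# Placeholder lines standing in for a real log file (illustrative values only).
sample = ["round,accuracy,loss", "1,0.30,2.0", "2,0.45,1.6", "3,0.42,1.7"]
print(summarize_metrics(sample))
```

To use it on a real file, pass an open file handle instead: `with open(path, newline="") as f: summarize_metrics(f)`.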

Key Takeaways

- FLEET provides a realistic testbed for evaluating FL algorithms.

- The setup is simple with the provided config.sh script.

- The default experiment demonstrates how to emulate FL under controlled conditions.

What’s Next?

In the next blog post, we’ll dive deeper into Hydra configuration, explaining how to customize datasets, models, and experiment settings. Stay tuned!

Read Next: Building Your First Experiment with Hydra