Managing Datasets and Partitioning in FLEET

Welcome to the third post in our FLEET tutorial series! 🚀 Today, we’ll focus on managing datasets and partitioning data for federated learning experiments. Datasets are a critical component of FL experiments, and FLEET's integration with Flower Datasets and HuggingFace Datasets makes this process seamless.

We'll explore:

- The dataset preparation process in FLEET.

- How to configure IID and non-IID partitioning.

- How server evaluation works and its requirements.

- Different configuration options available in FLEET.

- Tips for using datasets from HuggingFace.

Overview

Federated learning experiments often require datasets to be partitioned across multiple clients. FLEET simplifies this process through Hydra-based configurations, while leveraging partitioners from Flower. You can also adapt the dataset to support server evaluation (if applicable) and modify partitioning strategies to simulate realistic FL scenarios.

Step 1: Choose the dataset

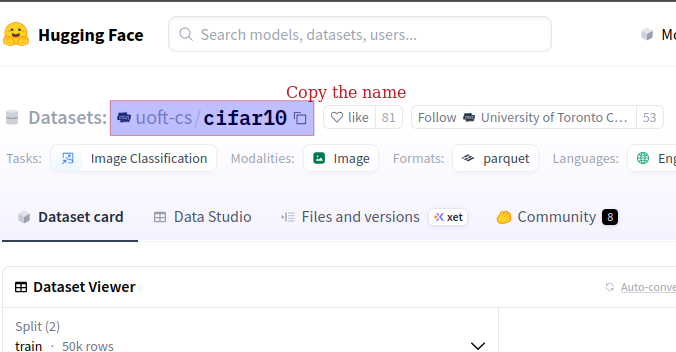

To choose a dataset, go to the Hugging Face Datasets Hub and search for it by name. You will pass that name to the dataset parameter of FederatedDataset. The name is case-sensitive.

Once a dataset is available on the HuggingFace Hub, it can be used in Flower Datasets immediately: no approval from the Flower team and no custom code are needed.

Here is how it looks for the CIFAR10 dataset.
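As a rough illustration of where the name ends up, the sketch below passes a Hub name to FederatedDataset and loads one client's partition. It assumes the flwr-datasets package is installed and the Hub is reachable. The helper name load_first_partition and the choice of 10 partitions are made up for this example, and the exact Hub identifier for your dataset should be copied from its dataset page.

```python
def load_first_partition(dataset_name: str, num_clients: int = 10):
    """Download `dataset_name` from the Hub and return client 0's training data."""
    # Imported lazily so this snippet can be loaded without flwr-datasets installed.
    from flwr_datasets import FederatedDataset

    # An integer here asks for an IID split of "train" into that many partitions.
    fds = FederatedDataset(dataset=dataset_name, partitioners={"train": num_clients})
    return fds.load_partition(0, "train")


# Usage (requires network access); remember that the name is case-sensitive:
# partition = load_first_partition("cifar10")
```

In FLEET you would normally not call this yourself, since the dataset name comes from the Hydra configuration described in the following steps.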


Step 2: Dataset Preparation in FLEET

In FLEET, the dataset preparation process is automatically handled in the main.py script. This centralizes the setup and ensures that the dataset is ready before the experiment begins.

The preparation process involves:

- Downloading the dataset (if not already downloaded).

- Applying the specified partitioning strategy.

- Splitting the dataset into train and test partitions.

Here’s how the preparation process is triggered in the script:

from common.dataset_utils import prepare_datasets

prepare_datasets(cfg.dataset)

This function reads the dataset configuration (cfg.dataset) and applies all relevant parameters, such as the partitioning strategy and the test split size. There is no need to create the dataset configuration object manually; Hydra takes care of it.
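The "if not already downloaded" check can be pictured as a simple cache test. The sketch below is a hypothetical stand-in, not FLEET's prepare_datasets: the needs_preparation helper and the directory layout (one folder per dataset name) are assumptions made for illustration, and force_create refers to the flag listed later in the dataset configuration.

```python
import tempfile
from pathlib import Path


def needs_preparation(root: Path, name: str, force_create: bool = False) -> bool:
    """Return True when the dataset directory must be (re)built."""
    target = root / name
    # Rebuild if explicitly requested, or if nothing usable is on disk yet.
    return force_create or not target.is_dir() or not any(target.iterdir())


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    first_run = needs_preparation(root, "cifar10")            # nothing on disk yet
    (root / "cifar10").mkdir()
    (root / "cifar10" / "partition_0.bin").write_bytes(b"")   # pretend data was saved
    second_run = needs_preparation(root, "cifar10")           # cached, can be skipped
    forced = needs_preparation(root, "cifar10", force_create=True)
```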

Step 3: Partitioning Strategies and Configurations

FLEET integrates multiple partitioning strategies provided by Flower. By default, the partitioning strategy is set to IID, but you can configure non-IID partitioning as well, such as Dirichlet or Shard-based. Each partitioner has its own configurable parameters, which must be specified in the partitioner_kwargs field.
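One plausible way a class name plus a dictionary of keyword arguments turns into a partitioner object is a lookup by name. The sketch below is an assumption about the mechanism, not FLEET's code: build_partitioner and the stand-in DemoPartitioner class are hypothetical. With Flower Datasets installed, the namespace argument could be the flwr_datasets.partitioner module instead of the toy registry.

```python
from types import SimpleNamespace


class DemoPartitioner:
    """Stand-in for a real partitioner class; it only records its arguments."""

    def __init__(self, num_partitions: int, alpha: float = 1.0):
        self.num_partitions = num_partitions
        self.alpha = alpha


def build_partitioner(namespace, cls_name: str, kwargs: dict, num_partitions: int):
    """Look up `cls_name` in `namespace` and instantiate it with `kwargs`."""
    cls = getattr(namespace, cls_name, None)
    if cls is None:
        raise ValueError(f"Unknown partitioner: {cls_name!r}")
    return cls(num_partitions=num_partitions, **kwargs)


# A toy registry that plays the role of the module holding partitioner classes.
registry = SimpleNamespace(DemoPartitioner=DemoPartitioner)
partitioner = build_partitioner(
    registry, "DemoPartitioner", {"alpha": 0.5}, num_partitions=10
)
```

Failing early on an unknown class name keeps a typo in the configuration from surfacing much later as an obscure error.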

Supported Partitioners in Flower

Here are some examples of partitioners supported by Flower:

- IidPartitioner: Distributes data equally and randomly across clients.

- DirichletPartitioner: Distributes data based on a Dirichlet distribution, controlled by an alpha parameter.

- ShardPartitioner: Divides data into shards and assigns a specific number of shards to each client.

Note: Each partitioner has its own set of parameters. To understand these parameters and how to use them, and for the full set of available partitioners, refer to the Flower Partitioners Documentation.
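To make the difference between IID and shard-based splitting concrete, here is a toy pure-Python sketch over a list of labels. It only mirrors the ideas described above; it is not Flower's implementation, and the function names are invented for this example.

```python
import random


def iid_partition(indices, num_clients, seed=0):
    """Shuffle indices and deal them out round-robin, so sizes differ by at most 1."""
    rng = random.Random(seed)
    shuffled = list(indices)
    rng.shuffle(shuffled)
    return [shuffled[i::num_clients] for i in range(num_clients)]


def shard_partition(labels, num_clients, shards_per_client, seed=0):
    """Sort by label, cut into equal shards, and give each client a fixed number of shards."""
    rng = random.Random(seed)
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    num_shards = num_clients * shards_per_client
    shard_size = len(order) // num_shards  # any remainder is dropped in this toy version
    shards = [order[s * shard_size:(s + 1) * shard_size] for s in range(num_shards)]
    rng.shuffle(shards)
    return [
        [i for shard in shards[c * shards_per_client:(c + 1) * shards_per_client] for i in shard]
        for c in range(num_clients)
    ]


labels = [i % 10 for i in range(1000)]  # 10 balanced classes, 100 samples each
iid = iid_partition(range(len(labels)), num_clients=5)
sharded = shard_partition(labels, num_clients=5, shards_per_client=2)

# Number of distinct classes each client sees under the two strategies.
iid_classes = [len({labels[i] for i in part}) for part in iid]
shard_classes = [len({labels[i] for i in part}) for part in sharded]
```

In this setup every shard holds a single class, so each shard-based client sees only two classes, while IID clients see a mix of classes.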

Example: Configuring Dirichlet Partitioning

To use the DirichletPartitioner, you can update the dataset configuration in static/config/dataset/default.yaml:

partitioner_cls_name: "DirichletPartitioner"

partitioner_kwargs:

  alpha: 0.5 # Controls the degree of non-IID-ness (lower values = higher non-IID-ness)

This will distribute data unevenly across clients, simulating real-world heterogeneity in federated learning.
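The effect of alpha is easier to see in a small simulation. The sketch below is a simplified, standard-library illustration of label-based Dirichlet partitioning, not Flower's DirichletPartitioner (which has additional parameters; see its documentation). The function names are hypothetical, and the Dirichlet vector is sampled by normalizing Gamma draws.

```python
import random


def dirichlet_class_shares(num_clients, alpha, rng):
    """Sample one symmetric Dirichlet(alpha) vector via normalized Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
    total = sum(draws)
    return [d / total for d in draws]


def dirichlet_partition(labels, num_clients, alpha, seed=0):
    """For each class, split its samples across clients using Dirichlet shares."""
    rng = random.Random(seed)
    parts = [[] for _ in range(num_clients)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        shares = dirichlet_class_shares(num_clients, alpha, rng)
        start = 0
        for client, share in enumerate(shares):
            # The last client takes whatever is left so no sample is lost to rounding.
            end = len(idx) if client == num_clients - 1 else start + int(share * len(idx))
            parts[client].extend(idx[start:end])
            start = end
    return parts


labels = [i % 10 for i in range(1000)]
parts = dirichlet_partition(labels, num_clients=5, alpha=0.5)
sizes = [len(p) for p in parts]  # typically uneven for small alpha
```

Rerunning with a much larger alpha (for example 100) should give client sizes and class mixes that are far closer to uniform.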

Step 4: Server Evaluation and Dataset Preparation

Server evaluation allows the FL server to evaluate the global model on a test dataset. This feature is controlled by the server_eval parameter in the server configuration (static/config/fl_server/default.yaml):

server_eval: true

When server_eval is enabled:

- The test split of the dataset is saved to a separate directory (server_eval).

- The server can use this test split to evaluate the global model after each training round.

Datasets Without a Test Split

Some datasets (e.g., custom datasets or specific HuggingFace datasets) may not have a predefined test split. In such cases, if server_eval is enabled, FLEET will log a warning and skip server evaluation.
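The warn-and-skip behavior can be sketched as a small guard function. This is an illustration of the logic described above, not FLEET's code: select_server_eval_split and the logger name are hypothetical, and the dataset is modeled as a plain dictionary of splits.

```python
import logging

logger = logging.getLogger("fleet_sketch")


def select_server_eval_split(splits: dict, test_split_key: str, server_eval: bool):
    """Return the split to evaluate on, or None if evaluation is off or impossible."""
    if not server_eval:
        return None
    if test_split_key not in splits:
        logger.warning("No %r split found; skipping server evaluation.", test_split_key)
        return None
    return splits[test_split_key]


with_test = select_server_eval_split({"train": [1, 2, 3], "test": [4]}, "test", server_eval=True)
without_test = select_server_eval_split({"train": [1, 2, 3]}, "test", server_eval=True)
disabled = select_server_eval_split({"train": [1], "test": [2]}, "test", server_eval=False)
```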

Step 5: Configuring Datasets in FLEET

The dataset configuration (static/config/dataset/default.yaml) includes the following fields:

path: "static/data" # Directory where datasets are stored

name: "cifar10" # Dataset name (e.g., cifar10, imdb)

partitioner_cls_name: "IidPartitioner" # Type of partitioning

partitioner_kwargs: {} # Parameters for the partitioner

force_create: false # Recreate dataset if true

test_size: 0.2 # Proportion of test data

server_eval: true # Enable server evaluation

train_split_key: "train" # Key for training data split

test_split_key: "test" # Key for test data split

Explanation of Key Fields

- path: Directory where datasets are saved.

- name: Name of the dataset to load. Supported datasets include Flower datasets and HuggingFace datasets.

- partitioner_cls_name: The partitioning strategy (e.g., IidPartitioner, DirichletPartitioner).

- partitioner_kwargs: Additional parameters for the partitioner.

- force_create: If true, the dataset will be recreated even if it already exists.

- test_size: Proportion of data to reserve for the test split (only applies if no test split is provided).

- train_split_key / test_split_key: Keys used to access training and test data splits.
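As one reading of how test_size behaves, the sketch below holds out a fraction of a list of samples as test data. It is a generic hold-out split written for illustration, not FLEET's implementation, and the train_test_split helper defined here is hypothetical.

```python
import random


def train_test_split(samples, test_size=0.2, seed=0):
    """Shuffle a copy of `samples` and hold out a `test_size` fraction for testing."""
    if not 0.0 < test_size < 1.0:
        raise ValueError("test_size must be strictly between 0 and 1")
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_size))
    # The first n_test shuffled items become the test set, the rest the training set.
    return shuffled[n_test:], shuffled[:n_test]


train, test = train_test_split(range(100), test_size=0.2)
```

With the default test_size of 0.2, 100 samples are divided into 80 for training and 20 for testing.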

Step 6: Using Proper Train and Test Keys

FLEET supports datasets from HuggingFace, but it's important to ensure that you use the correct keys for the train and test splits. These keys vary between datasets and can be found on the HuggingFace dataset page.

For example, the IMDB dataset has the following splits:

- Train: "train"

- Test: "test"

Make sure to update the dataset configuration accordingly:

train_split_key: "train"

test_split_key: "test"
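A quick sanity check on the configured keys can catch mismatches before an experiment starts. The sketch below assumes you have already read the list of split names from the dataset page. The check_split_keys helper is hypothetical, and the second dataset in the example is invented to show a missing test split.

```python
def check_split_keys(available, train_split_key="train", test_split_key="test"):
    """Raise if the training key is missing; return whether a test split exists."""
    available = list(available)
    if train_split_key not in available:
        raise KeyError(
            f"train_split_key={train_split_key!r} not found; available splits: {available}"
        )
    return test_split_key in available


imdb_has_test = check_split_keys(["train", "test"])            # splits listed above for IMDB
other_has_test = check_split_keys(["train", "validation"])     # made-up dataset without "test"
```

A False result corresponds to the case where server evaluation would be skipped unless test_split_key is pointed at a split that actually exists.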

Key Takeaways

- FLEET handles dataset preparation automatically in the main.py script.

- Flower provides several partitioning strategies (e.g., IID, Dirichlet, Shard-based). Check the Flower Documentation for details.

- Server evaluation requires a test split and is controlled by the server_eval parameter in the server configuration.

- Some datasets may lack a test split; FLEET will skip server evaluation in such cases.

- Configure the correct keys for train/test splits when using HuggingFace datasets.

Next Steps

In the next post, we’ll dive into network emulation and topology—how FLEET simulates realistic network conditions for FL experiments.

Stay tuned! 🎉

Previous: Building Your First Experiment with Hydra
